Disruptions over this weekend

3 years ago

So just a quick devlog/post mortem of what happened. To background this a bit, it's going to get a little technical. I am also not a Cloud Engineer, I am not a Database admin, I know just enough to be dangerous! I have been a web developer/server developer for a long time and have worked with a lot of different technology over nearly 2 decades, but I am no expert, there is always something to learn.

The main storage for STKP data is a chunky MySQL instance, I manage the config such myself and theres plenty of ways I can and will optimise it as I learn a bit more about the platform!

The Issue

So, a few months back when the older api was having some issues I made some configuration changes based on issues that were being encountered at the time. One of these changes was to increase the innodb_io_capacity flag, increase the innodb_change_buffer_max_size and a reduction of the innodb_buffer_pool_size.

Now, I can't fully remember the circumstances for these changes but these fixed the old issues.

Jump forward a few months to a way too humid evening in the north east of england and suddenly everything is slowing to a crawl.

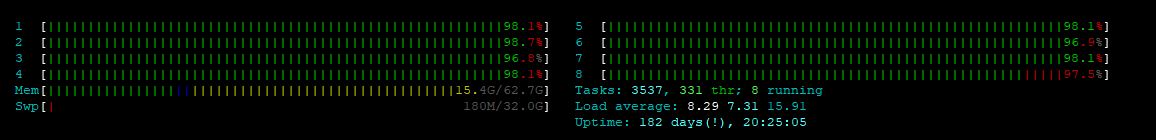

htop is showing all cores nearly at full tilt. This is a decently powerful server for the database, so I fire up the mysql client and see a slowly growing queue of queries in the processlist. These are simple queries with indexes that should be super super fast to execute. Restarting the server alleviates the problem temporarily before it starts again and the query backlog grows.

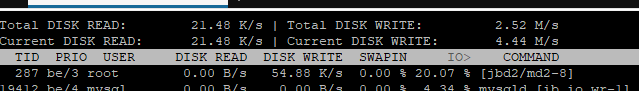

A quick check of iotop shows that a command [jbd2/md2-8] is hammering the disk (image is not representitive of the state at the time, it was far higher!)

I am not an expert on MySQL so I do some digging and several stack overflow tabs later it looks like the bottleneck is this. The io capacity is too large and the disk is unable to finish flush to disk before it receives more data needing to be flushed to disk (at least that is my understanding).

The Fix

So to fix the issue, I increase the innodb_buffer_pool_size again based on some calculations, there is still room to increase this in the future. I remove the change to the innodb_io_capacity back to the server default. Restarting the server and the queries seem to flush through much much faster. Excellent.

I managed to re-enable all the paused services and things have started to respond.

Next Steps

There are still far too many queries being handled for my liking currently, the old API is based on a hand built MVC I have incrementally updated and upgraded over the years and clearly isn't a good fit for the job anymore. Essentially there are multiple queries being called that don't entirely need to be.

APIv2 which is launching with 0.9.0 will improve this by being a stateless rest API written in node/express. This also include custom per-route caching to further improve performance. The plan now is to move much of functionality over from the old API to the new one where viable. This will be an incremental process over the next few weeks to cause as little disruption as possible!

I am also looking at the option of moving to a document storage database as it may suit the project better in the long term but again, my exposure to platforms such as MongoDB and DynamoDB has been limited so I am currently not in a position to decide if it is the best route forward but I am investigating!

Ok, time to keep an eye on things for the rest of the evening. Sorry for any disruption you may have had during flights this evening, hopefully it won't occur again! Thought it would be interesting for some to get this information out and a little look "under the cowling" for STKP.

Cheers! Dan <3